PBS Tidbit 14 of Y: Coding with AI

In a recent instalment of Allison’s Chit Chat Across the Pond podcast, we discussed my experiences with AI in general and promised deeper dives into coding with AI and working on the terminal with AI. This is the first of those promised deeper dives (the second will appear as an instalment of Taming the Terminal sometime soon).

Matching Podcast Episodes

You can also Download the MP3

Read an unedited, auto-generated transcript with chapter marks: PBS_2025_10_25

Some Context

My AI journey began at work, where I agreed with my manager that I would make time in 2025 to experiment with AI so I’d have the first-hand experience needed to have meaningful discussions around the topic in my role as a cybersecurity specialist. Because of this work setting, I couldn’t just do whatever I wanted; I needed to comply with all our policies, most importantly, our data protection policies. With my programming hat on, that meant confining myself to the enterprise versions of Microsoft’s Copilot suite, specifically the general-purpose Microsoft 365 chatbot in my Edge sidebar, and GitHub Copilot in VS Code.

It’s been almost a year now, and I’ve really come to enjoy having these two Copilots help me with my various bits of coding work. This year, that’s meant getting some assistance while working with:

- Some Excel formulas

- Some JSON processing with jq

- A little shell scripting with Bash

- A lot of data processing and dashboard creation in Azure Log Analytics Workspaces with KQL

- A lot of PowerShell scripting

- And even more Linux automation with Ansible, which involves:

  - Lots of YAML files defining custom Ansible components like roles and playbooks

  - INI files defining inventories

  - Jinja2 template strings all over the place

If that sounds like a lot of different technologies and a lot of confusing context switching, you’d be absolutely right!

Earlier in my career as a dogsbody sysadmin, I generally got to immerse myself deeply in just one or two languages: initially Bash and Perl, and later JavaScript on NodeJS. That meant almost everything I needed was fresh in my mind, and I could work away with little need to reference documentation. But in my current role, I need to jump from tool to tool multiple times a week, or even a day. That kind of context switching gets really confusing, really fast. I still have lots of knowledge and experience buried deep in my brain, but I need some help triggering the right memory at the right time, and having the appropriate Copilot sitting right there in my sidebar has proven invaluable!

I really like Microsoft’s naming choice here, because there is simply no way these AIs are even close to replacing skilled humans working on real-world, mission-critical code, but they can provide valuable assistance that makes those skilled humans more effective. Think of them as force multipliers, not replacements.

Big-Picture Advice

Large Language Models, or LLMs, are very much their own unique and indescribable thing. But when coding, I suggest you keep two abstractions in mind: think of them as averaging machines and as word association machines.

LLMs are Averaging Machines

Our various bots have been trained on millions of lines of other people’s code, and will produce code that’s about as good as the average line of code out there. Sometimes average is good enough, but sometimes it really isn’t!

When you’re doing something quick and dirty, it’s probably just fine. If the code the bot spits out does the one thing you need to do in the moment, great! Job done, move on with your day.

But when you’re working on a production code base that’s going to be deployed to the real world, dealing with real data for years to come, average is not nearly good enough! In that kind of situation, you need to treat everything the LLM produces as a starting point to build from.

You need to check the real meaning of every argument, parameter, flag, etc., to be sure it does what you think it does. You should also assume it’s going to miss a bunch of edge cases, so you probably need to add some additional checks around the suggested code. And you should assume it won’t fail gracefully when it meets the real world, and add any missing error handling.
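To make that concrete, here’s a minimal Python sketch contrasting the kind of happy-path code an assistant might plausibly suggest with a version that has been checked for edge cases. Both functions are hypothetical illustrations, not output from a real Copilot session, and computing an average seemed a fitting example.

```python
from __future__ import annotations

from collections.abc import Iterable


def naive_mean(values: list[float]) -> float:
    # Typical happy-path suggestion: fine for normal input,
    # but raises ZeroDivisionError when the list is empty.
    return sum(values) / len(values)


def robust_mean(values: Iterable[object]) -> float | None:
    """Mean of the values, or None when there is nothing to average.

    Edge cases handled explicitly:
    - empty input returns None instead of dividing by zero
    - non-numeric entries (e.g. stray text from a CSV) raise a clear TypeError
    """
    numbers: list[float] = []
    for value in values:
        # bool is a subclass of int in Python, so reject it explicitly
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise TypeError(f"non-numeric value in input: {value!r}")
        numbers.append(float(value))
    if not numbers:
        return None
    return sum(numbers) / len(numbers)


if __name__ == "__main__":
    print(robust_mean([1, 2, 3]))  # 2.0
    print(robust_mean([]))         # None
```

Whether an empty input should return None, raise, or return zero is a design decision only you can make for your code base; the point is that the naive version never made that decision at all.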

LLMs are Word Association Machines

When you’re working near the edges of your understanding, search engines can be infuriating to use because when you ask vague, generic questions, you get utterly useless results.

LLMs, on the other hand, can leverage their abilities to associate those same vague, generic words with at least some relevant information, allowing them to give you at least somewhat helpful responses.

But that doesn’t mean using the correct jargon is unimportant. On the contrary, the more accurately you use the appropriate jargon, the higher the quality of the information these robots will associate with it, and the more helpful and correct their replies will be.

When you don’t know the right words to use, take cues from the helpful parts of the early answers in the conversation. Ask for clarifications between similar-sounding words, and fine-tune your responses to adopt ever more of the correct jargon.

Conversations, not Questions

Speaking of conversations, LLMs are not search engines; they’re chatbots! Unlike 2025 Siri, they do remember what you just said! Yes, they’re excellent alternatives to search engines when you’re trying to get conceptual answers rather than find specific resources, but they’re not drop-in replacements; you need to interact with them differently. Don’t treat the chat box like a search box: treat your initial question as a conversation starter, and don’t accept the first response as the real answer. Ask follow-up questions. If the response is clearly off base, reply explaining why it’s not what you need. If you don’t understand some or all of the answer, ask for clarifications. If you’re not sure whether some suggested code will handle a specific edge case, ask!

Another useful thing to do is to ask for links to relevant parts of official documentation. If the conversation has led you to believe a specific library, class, or function is the best fit, ask for a link to the docs so you can really understand what the library, class, or function actually does. This is another way to ensure the library, class, or function actually exists and the chatbot hasn’t hallucinated it into existence. (Yes, that happens, especially in languages and libraries with consistent naming schemes for things.)
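Reading the official docs is the real check, but in Python you can also run a quick local sanity check that a suggested module attribute actually exists in the version you have installed, and that it declares the parameter you were told about. This is a minimal sketch. The helper names api_exists and has_parameter are my own, and a function that accepts **kwargs can still take names that don’t appear in its signature, so treat a pass as necessary rather than sufficient.

```python
from __future__ import annotations

import importlib
import inspect
from collections.abc import Callable


def api_exists(module_name: str, attribute_path: str) -> bool:
    """True if the module can be imported and has the (dotted) attribute."""
    try:
        obj = importlib.import_module(module_name)
    except ImportError:
        return False
    for part in attribute_path.split("."):
        if not hasattr(obj, part):
            return False
        obj = getattr(obj, part)
    return True


def has_parameter(func: Callable[..., object], name: str) -> bool:
    """True if `name` is an explicitly declared parameter of `func`."""
    try:
        return name in inspect.signature(func).parameters
    except (TypeError, ValueError):
        # Some built-ins don't expose a signature; fall back to the docs.
        return False


if __name__ == "__main__":
    import json

    print(api_exists("json", "dumps"))          # True
    print(api_exists("json", "dumps_pretty"))   # False: a made-up name
    print(has_parameter(json.dumps, "indent"))  # True
```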

Favour Chatbots that Show Their Sources

If you have the choice between two LLMs, one that doesn’t show you links to the sources it used to construct its reply and one that does, use the one that does! Firstly, the referenced websites are a great initial indicator of the response’s quality: if you’re asking about PowerShell and the sources are at learn.microsoft.com, there’s a good chance the answer is accurate, but if it’s simply based on reddit.com posts, increase your skepticism! But more than that, when the answer to your question is mission-critical, take the time to open the linked sources and verify the function calls, argument names, or whatever else matters for yourself.

Context is King

Now that you’re thinking in terms of conversations and the tools having a memory, let’s loop back to that first abstraction: LLMs act as averaging machines. The context you give an LLM biases what it averages against.

In the context of a chat interface, that means code snippets, technologies, libraries, classes, functions, and whatnot mentioned earlier will be weighted more highly in the averages that appear as responses.

But LLMs don’t just chat; they can also offer short code completions and even suggest entire sections of code as you type. These suggestions are also influenced by context, but that context is coming from the tool they’re integrated into.

When you use an AI-enabled integrated development environment (IDE), it considers the code surrounding the code you’re working on: your current file, all your open tabs, and, in many tools, all the code in the currently open project. This means that if you have a consistent coding style, the suggestions will start to sound ever more like your own code. The bigger the project you’re working on, the better the suggestions get! So, don’t experiment with toy projects; experiment with those ancient codebases that go back decades and that no one person fully understands. That’s where the tools can give the absolute most value!

The Advantages an IDE Integration Brings

As I’ve just hinted, one of the biggest advantages integrations bring is automatic context — you don’t have to tell the AI which tools you’re using, because the AI sees your code already!

Not only can you save yourself some prompting, but you can also refer directly to specific parts of your code more easily. You could ask ‘why does line 42 give a divide by zero error?’, but you don’t even have to be that explicit; you can simply select the line or lines your question relates to and refer to them as ‘this’!

In terms of chatting, you get free context and quick and easy references to specific chunks of code. But what about the replies? Can you do more with those, too? Of course you can! When the bot returns suggested code, you get buttons to copy the code, insert the code at your cursor, or, if the suggestion is a change, an option to automatically apply the change to your file or files! That last option sounds really scary, but VS Code’s GitHub Copilot integration does it in a very explicit and safe way. It shows you what it will remove in red, what it will add in green, and gives you accept and reject buttons for each proposed change.

But you get value from an AI-enabled IDE without even opening a chat box, thanks to dramatically more powerful code completions. Rather than just offering completions for the word you’re currently typing, the AI will offer entire sections of code, including comments! Even when the suggestions aren’t perfect, it’s often much less work to tweak a few details than to type it all out from scratch!

An Important Warning — NEVER INCLUDE SECRETS IN YOUR CODE

It's always been bad practice to embed secrets into code. That goes double for code committed to remote repositories, and triple for code shared with an AI as context. If you’re going to use AI coding tools, never ever ever include secrets of any kind (passwords, private keys, API keys, authentication tokens, etc.) in your code!

Different languages and environments have different best practices for secret management, so the real advice is to read the relevant documentation for the technologies you’re using! But having said that, I will offer one piece of generic advice — most languages support environment variables, so they are often the right answer. In general, I pass my secrets to my scripts and apps using environment variables.
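As one example of that generic advice, here’s a minimal Python sketch of reading a secret from an environment variable and refusing to continue without it. The variable name EXAMPLE_API_KEY and the require_secret helper are hypothetical; check your own platform’s documentation for its preferred approach.

```python
import os


def require_secret(name: str) -> str:
    """Fetch a secret from the environment, failing loudly if it's missing.

    The secret lives outside the source file, so it never becomes part of
    the context an AI coding assistant reads.
    """
    value = os.environ.get(name)
    if not value:
        # Fail fast with a clear message rather than carrying on unauthenticated.
        # Only the variable's name is reported, never the secret itself.
        raise RuntimeError(f"required environment variable {name} is not set")
    return value
```

A script would call require_secret("EXAMPLE_API_KEY") once at start-up, so a missing secret stops it immediately rather than causing a confusing failure halfway through a run.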

Some Anecdotes from AI Programming Adventures

These are not a definitive list of the best examples, but rather some anecdotes that caught my eye at times when I had the time, energy, and presence of mind to grab them. These anecdotes don’t prove anything, but they do add colour to my thoughts above.

Example 1 — Coding Help From a Regular Chatbot

As a first example, here’s a recent positive experience I had using just the regular Microsoft 365 Copilot Edge sidebar to get some useful coding help.

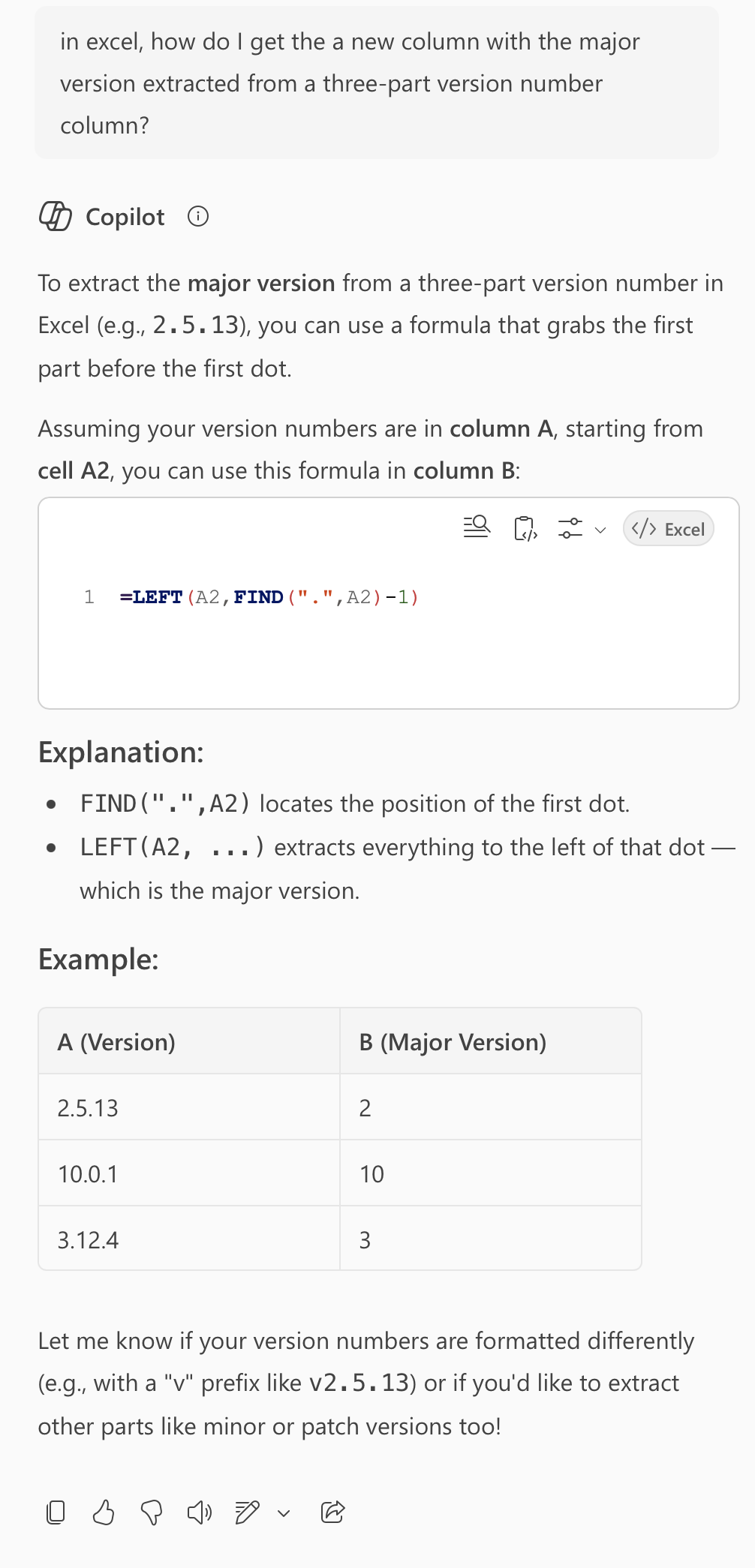

To set the scene — I had a CSV export from a reporting tool that listed a whole bunch of rows with macOS version numbers as major, minor, and patch numbers separated by dots, e.g., 15.6.1, and I needed to build some new columns to start aggregating the results into more useful buckets. First, I needed the major version as a column. I know Excel can split strings, but I couldn’t remember the syntax, so I asked Copilot to help me with the formula. Since I was using a generic chatbot, I was careful to give all the needed context in my question — “in excel, how do I get a new column with the major version extracted from a three-part version number column?”:

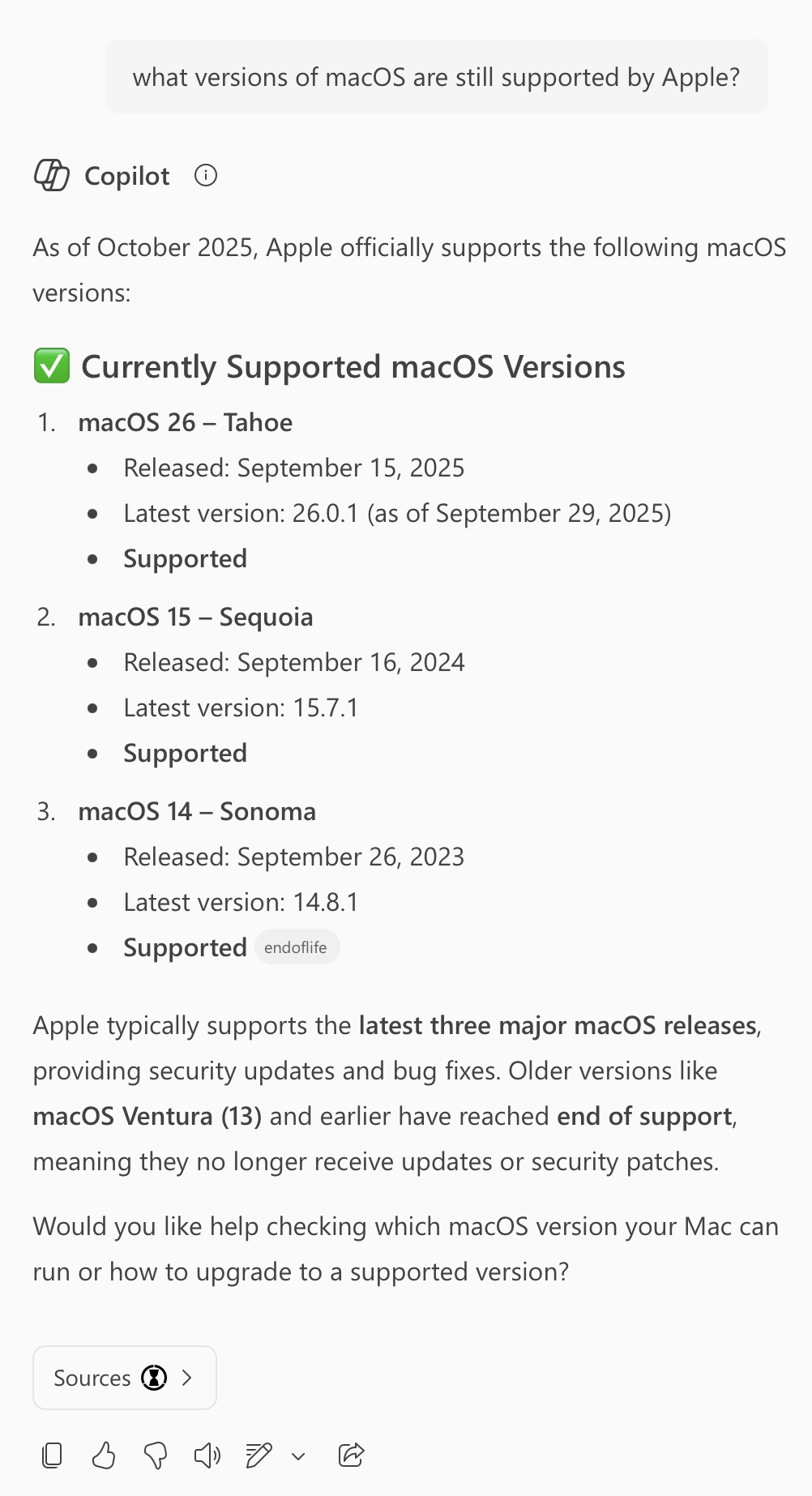

My data was in a different column, so I needed to change the cell references in the formula, but the suggested formula worked perfectly. I now had my new column with a major version number. That gave me a better feel for my data, but then I realised my real question was not what the major version number was, but whether the version in question was supported. At this stage, I’ve utterly lost touch with which versions Apple does or does not support, so I started a new chat and asked, “what versions of macOS are still supported by Apple?”:

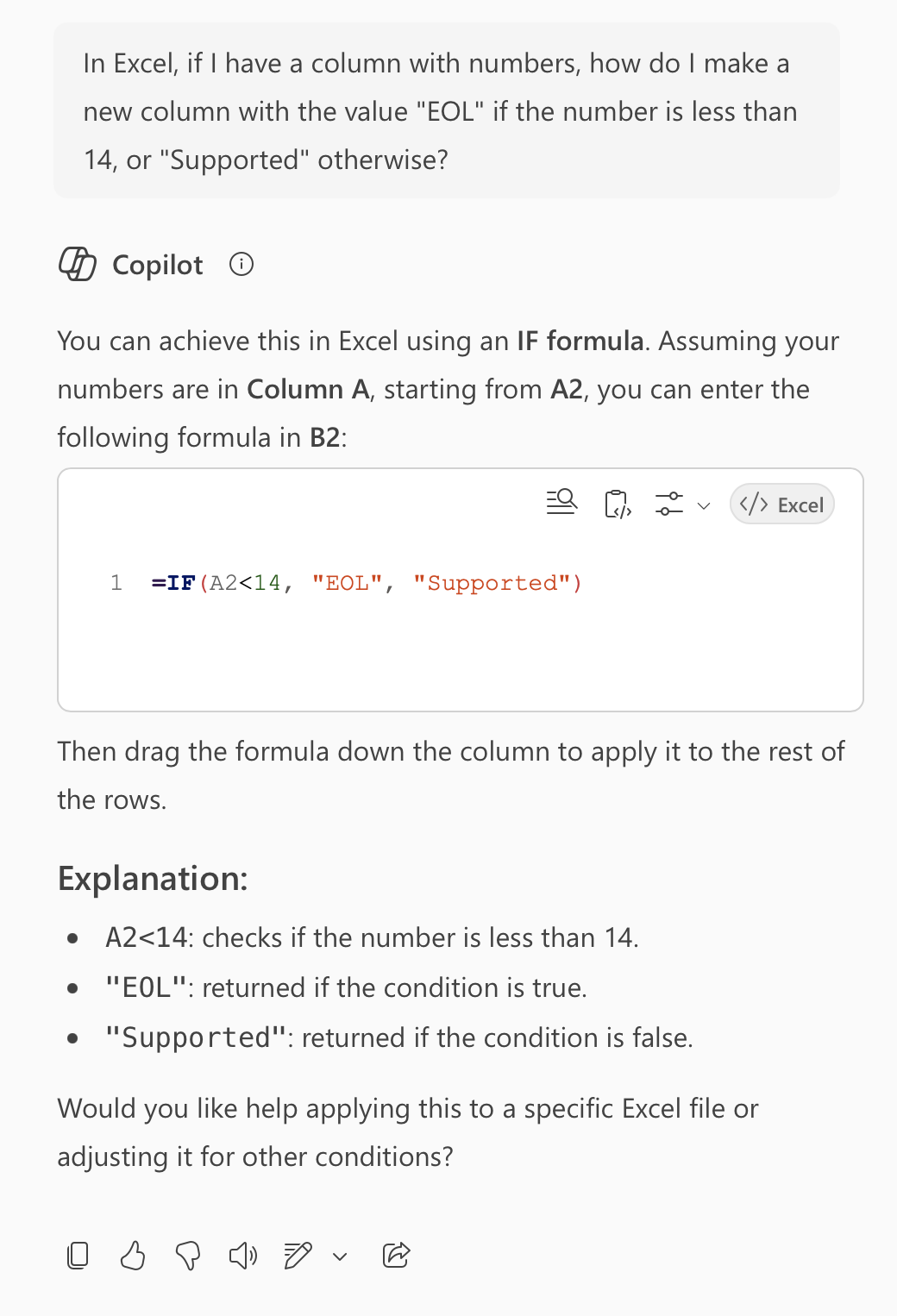

OK, so major versions older than 14 are obsolete. My final step was to build a second new column marking each row as obsolete or supported, so I started one more chat with the clear question “In Excel, if I have a column with numbers, how do I make a new column with the value “EOL” if the number is less than 14, or “Supported” otherwise?”:

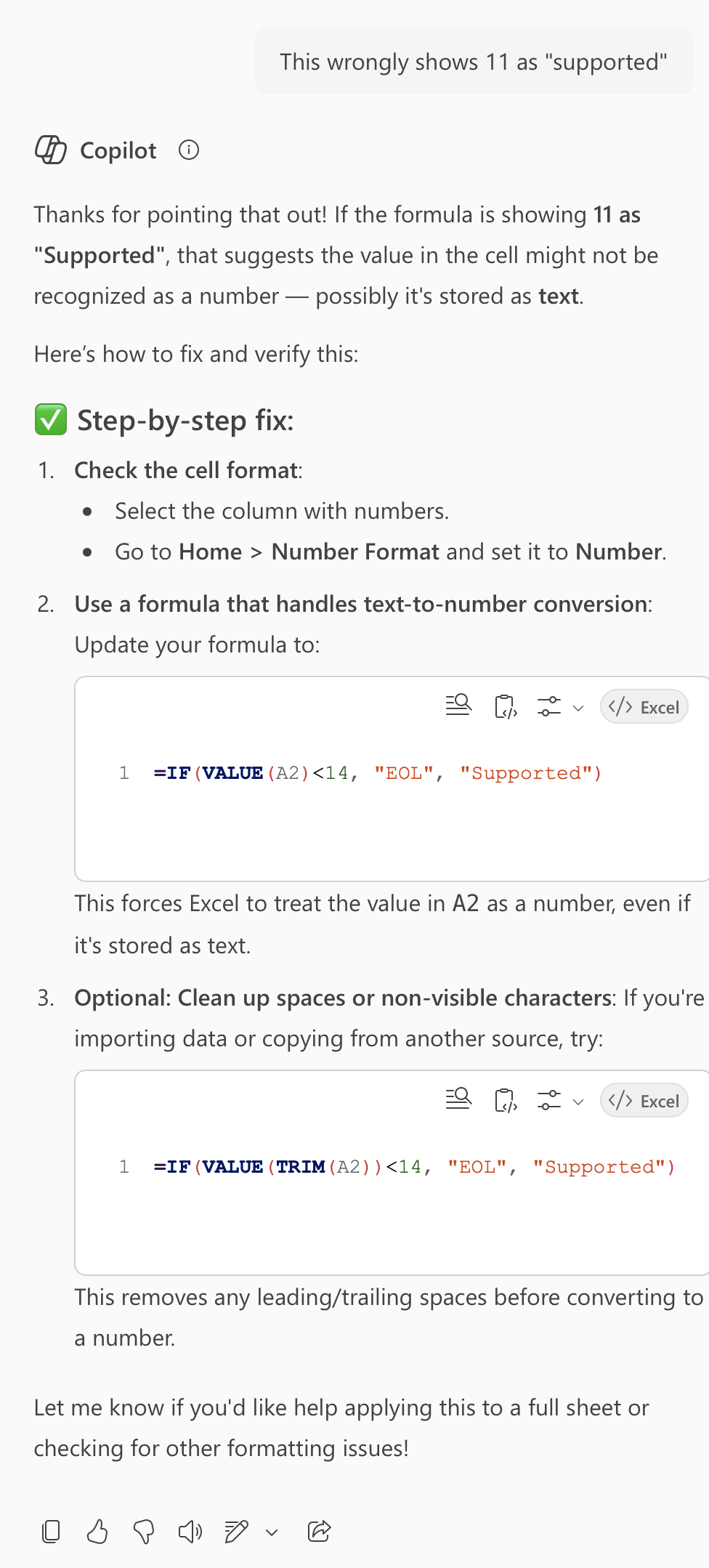

The suggested formula looked plausible to me, so I pasted it in and found that all versions of macOS were showing as Supported, even macOS 11 … hmm … that’s not right! So, I continued the same conversation with a nice clear follow-up: “This wrongly shows 11 as ‘supported’”:

OK, so the first suggestion was so simple I’d actually already tried it before asking my follow-up question. I decided to skip right to the third and most robust option (=IF(VALUE(TRIM(A2))<14, "EOL", "Supported")), and it worked perfectly! I could now conditionally format my spreadsheet to show obsolete OSes in a scary red colour, and supported OSes in a reassuring green colour 🙂
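For readers who think more easily in code than in spreadsheet formulas, here’s a rough Python equivalent of the two steps: pull out the major version, then label it. It’s a sketch, not a translation of Copilot’s exact formulas (the first one only appears in the screenshot), and the function names and the EOL_THRESHOLD constant are my own. The important detail mirrors the VALUE(TRIM(...)) fix: the major version has to become a real number before it’s compared. Excel treats any text value as greater than any number in a comparison, so if the major version came out of a text function, a text 11 is never less than 14, which is a likely explanation for every row showing as Supported.

```python
EOL_THRESHOLD = 14  # major versions below this were unsupported at the time of writing


def major_version(version: str) -> int:
    """Major version from a dotted string such as '15.6.1'.

    Like TRIM() then VALUE() in the working Excel formula, strip stray
    whitespace and convert to a real int so comparisons are numeric.
    """
    return int(version.strip().split(".")[0])


def support_status(version: str) -> str:
    """'EOL' if the major version is below the threshold, else 'Supported'."""
    return "EOL" if major_version(version) < EOL_THRESHOLD else "Supported"


if __name__ == "__main__":
    for v in ["15.6.1", " 14.7.2", "11.7.10"]:
        print(f"{v.strip()}: {support_status(v)}")
```

Python is stricter than Excel here: comparing the string "11" with the integer 14 raises a TypeError instead of quietly returning an answer. A blank or malformed cell would make int() raise a ValueError, which you may prefer to map to a label of its own.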

Example 2 — Copilot Hallucinates, and may not be Best for Generic Programming Questions

Let’s start by throwing some cold water on the AI hype and scare-mongering about replacing actual programmers with a prompt. Maybe some day, but not today or tomorrow!

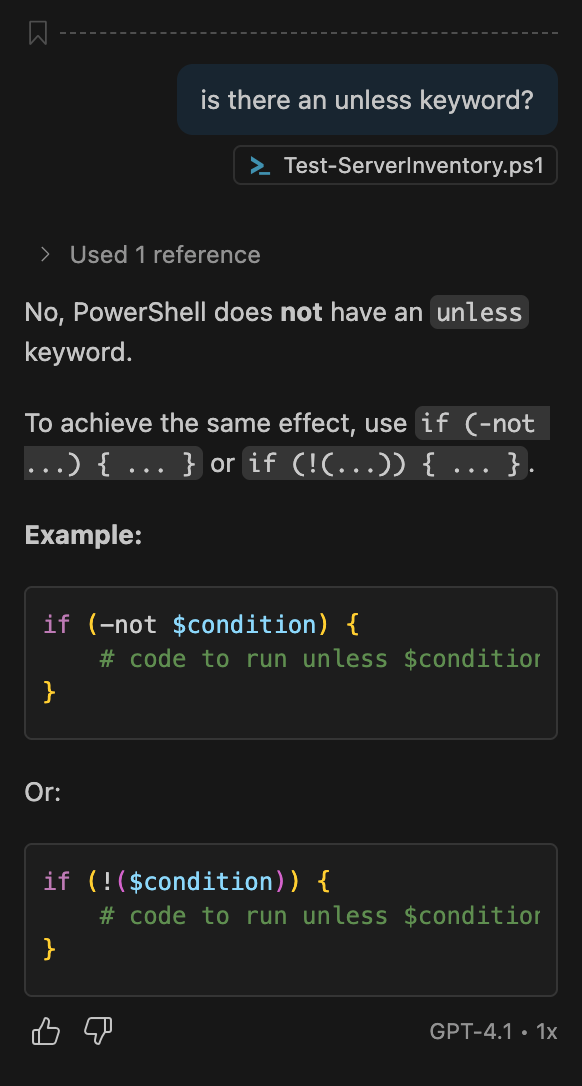

To set the scene, I needed to go back and add a new feature to an existing PowerShell script: support for a -Quiet flag to suppress all output that’s not an error or a warning. That meant implementing the human logic ‘unless in quiet mode, print …’ repeatedly. I like to write my code in the most English-like way the syntax allows, and I know some languages have an unless keyword that acts like ‘if not’. When it’s available, I really like to use it, but I couldn’t remember whether PowerShell was one of the languages that support this keyword.

Because this script is versioned in Git, I was working in VS Code, so the obvious place to ask my question was the GitHub Copilot sidebar. Because of the free context, I didn’t need to tell GitHub Copilot that I was asking a PowerShell question, so I could simply ask “is there an unless keyword?”

Copilot broke the bad news that there is no such feature in PowerShell, but did helpfully show me how to combine if and -not to achieve the same result.

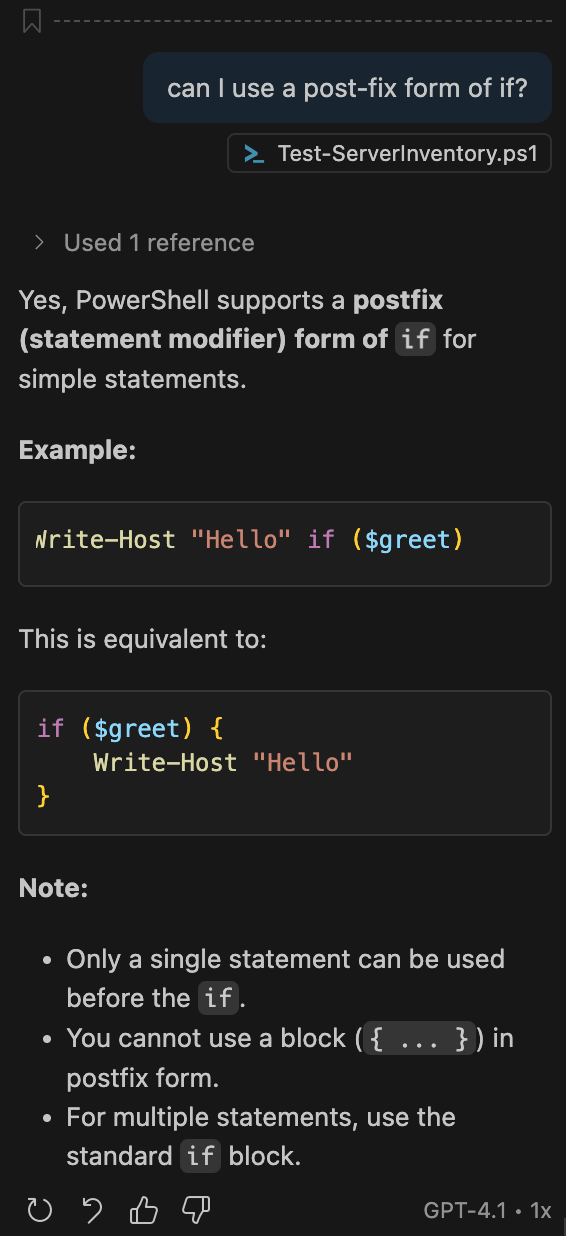

Fine, but I didn’t want three lines of code for each print statement, or text pushed so far to the right that bits of it would be hidden until I scrolled, so I had a follow-up question. Another feature some languages support is a post-fix form of the if statement. That is, a special variant of if for controlling the execution of just a single statement rather than a code block, where the statement comes before the condition. This can make some statements read in a more English way, so I used it a lot when I was working in Perl, for example:

print "some message" if ! $QuietModeEnabled;

So I continued my conversation with a simple followup — “can I use a post-fix form of if?”.

Copilot confidently told me I could, and gave me an example code snippet that looked just like what I was hoping would work, yay!

So I updated my script and tested my new -Quiet flag, and not only did it still print all the output I was trying to suppress, it also printed if True after every line of output. Huh?

It turns out Copilot hallucinated the entire feature! What the code sample, and hence my updated code, actually did was pass three arguments to PowerShell’s Write-Host command: the string I was trying to conditionally print, the string 'if', and the Boolean result of evaluating the condition, which should indeed be True when the -Quiet flag is set!
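Python’s print() happens to treat multiple arguments much like Write-Host does, printing them separated by spaces, so it makes a handy analogy for what went wrong. This is only an illustration of the mechanism, not the PowerShell from my script; the two function names are hypothetical, and the quiet parameter stands in for the -Quiet flag.

```python
def emit_hallucinated(message: str, quiet: bool) -> None:
    # The "post-fix if" Copilot invented is really three ordinary arguments:
    # the message, the literal word "if", and a Boolean. All three get printed,
    # separated by spaces, whatever the value of quiet.
    print(message, "if", quiet)


def emit_correct(message: str, quiet: bool) -> None:
    # What was actually needed: a genuine conditional around the output.
    if not quiet:
        print(message)


if __name__ == "__main__":
    emit_hallucinated("Processing files...", quiet=True)  # prints: Processing files... if True
    emit_correct("Processing files...", quiet=True)       # prints nothing
```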

Out of curiosity, I asked my favourite privacy-protecting stand-alone chatbot, Lumo, if PowerShell supports a post-fix form of if, and it quite correctly told me that it does not, and suggested I just use the regular pre-fix form, which is what I ended up doing!

If you don’t believe AIs hallucinate, you might want to know about the study that OpenAI conducted that determined that hallucinations are a mathematical certainty: openai.com/…

Example 3 — A Bit of Everything

In this example, the exact syntax and what it means are much less important than the AI interactions, so don’t stress about the minutiae of what it is I needed to do.

With my work hat on, our tool of choice for managing the setup of our fleet of a few hundred Linux servers is Ansible, an implementation of the infrastructure-as-code philosophy. With Ansible, you use a collection of YAML, INI, and JSON files to define the reality you wish to be true, and Ansible figures out how to make it so.

Imagine you need to host a large web app; you could use Ansible to define a list of needed VMs, say:

- 15 web servers

- 3 MySQL servers

- 2 load balancers

For each of those you would then define a role that would specify things like:

- The list of RPM/DEB packages that need to be installed

- The various configuration files needed to set the server up

- The list of Git repos to be checked out onto the servers

All of this is captured in plain text files that can themselves be committed to Git, hence, the term infrastructure as code.
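Ansible’s inner workings are far richer than this, but the core desired-state idea can be sketched in a few lines of Python: compare what should be true with what is true, act only on the difference, and change nothing when they already match. That last property, idempotence, is what makes it safe to apply the same definitions over and over. The package names and the in-memory ‘server’ are purely hypothetical, and the ‘changed’ and ‘ok’ labels simply echo the statuses Ansible reports for each task.

```python
from __future__ import annotations


def missing_packages(desired: set[str], installed: set[str]) -> set[str]:
    """Packages declared in the desired state but not yet installed."""
    return desired - installed


def converge(desired: set[str], installed: set[str]) -> tuple[set[str], bool]:
    """'Install' whatever is missing and report whether anything changed.

    Like a package task with state=present, this only adds packages; it
    never removes ones that weren't declared.
    """
    to_install = missing_packages(desired, installed)
    return installed | to_install, bool(to_install)


if __name__ == "__main__":
    desired = {"nginx", "git"}   # hypothetical role definition
    server = {"git", "openssh"}  # hypothetical current state of a server

    for run in (1, 2):
        server, changed = converge(desired, server)
        print(f"run {run}: {sorted(server)} -> {'changed' if changed else 'ok'}")
```

The second run finds nothing missing and reports ok, which is exactly the behaviour you want from a tool that re-applies the same definitions to hundreds of servers.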

I was busy creating a new Ansible role to capture the needed setup to deploy automatically-updating TLS certificates from a new Certificate Authority using the ACME protocol (similar to Let’s Encrypt, but with additional enterprise-focused features). As usual I was working in VS Code with GitHub Copilot enabled, and since our Ansible Git repo already has tens of custom roles defined within it, the code completions it was offering were excellent, and it was saving me a lot of typing and time.

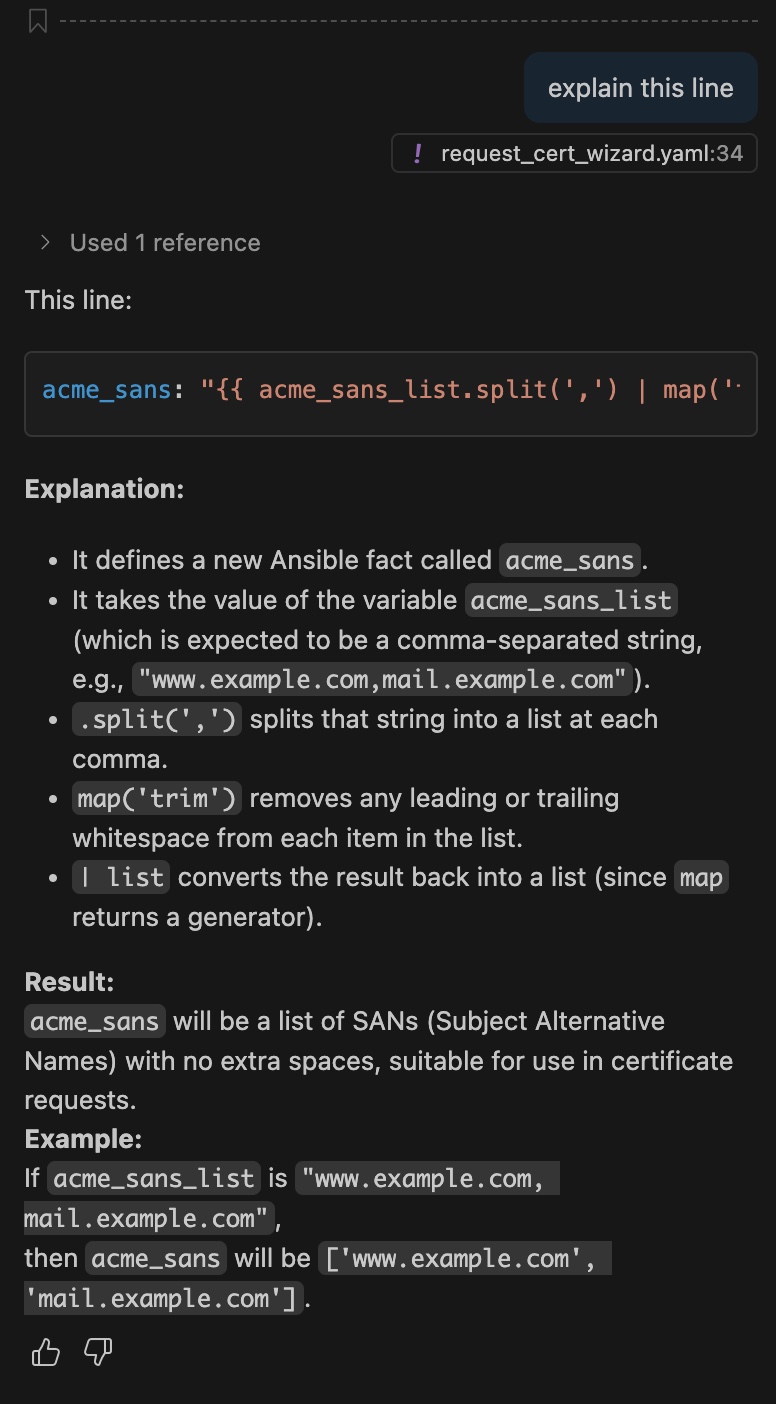

At one point, GitHub Copilot’s code completion offered me about ten lines of code, nine of which made perfect sense to me, and fit our various conventions perfectly, but one line used an approach that was new to me, so I selected it, and literally asked GitHub Copilot to “explain this line”:

This made perfect sense to me, and the example showed it would do exactly what I needed — convert a comma-separated list of domain names into an array of domain names.

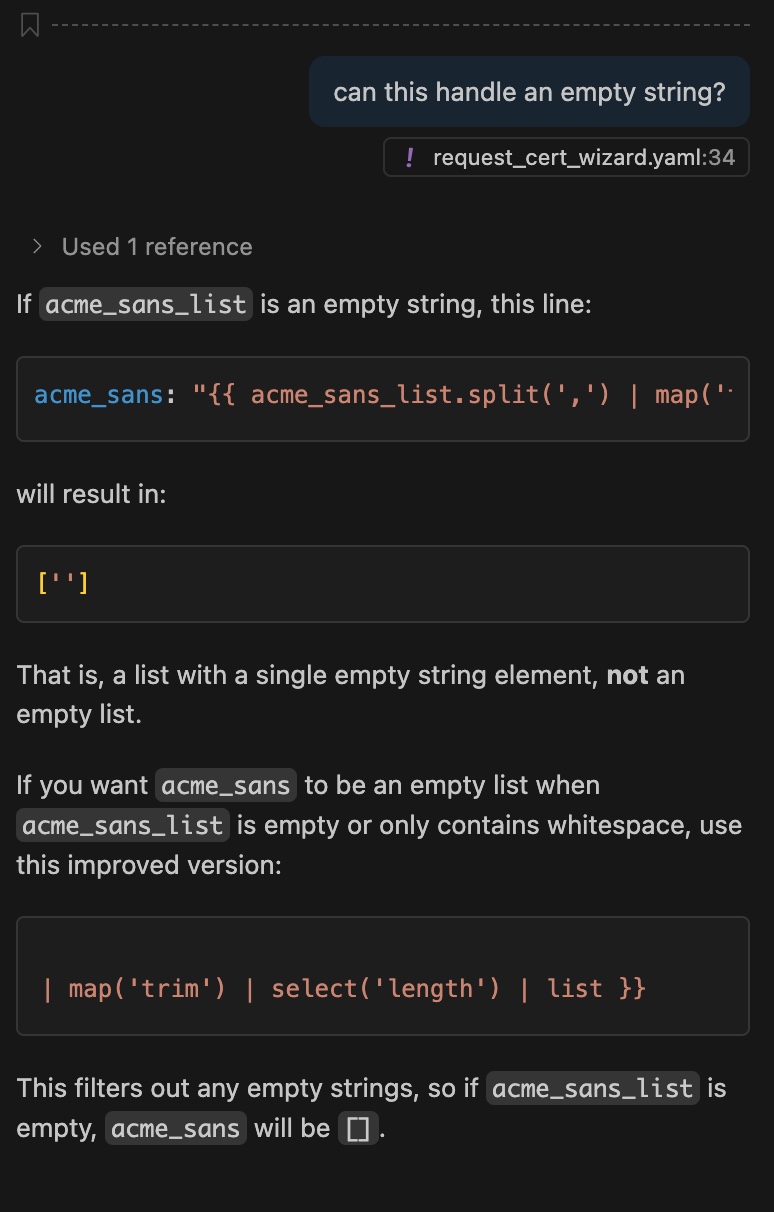

Earlier, I highlighted the fact that AI tools generate average code, so you need to treat it as a starting point and verify it handles all needed edge cases elegantly. This suggested code completion illustrates that perfectly, because I was immediately suspicious that it would not fall back gracefully to an empty array if the input was an empty string. Since it’s not just legitimate but quite common to need TLS certs that cover just a single domain name, i.e. with no additional Subject Alternative Names, or SANs, my code absolutely needed to handle that scenario correctly. So, I continued the conversation with GitHub Copilot and asked “can this handle an empty string?”:

I was glad I asked, because an array with one empty string is not at all the same as an empty array! Thankfully GitHub Copilot offered a suggested fix, so I applied it.
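Plain Python shows exactly the trap I was worried about, because splitting an empty string on commas doesn’t give you an empty list. The exact Jinja2 line is only visible in the screenshot, so this sketch simply assumes it boiled down to a split on commas; the function names are hypothetical, and the filtering approach is one common fix, not necessarily the one GitHub Copilot suggested.

```python
from __future__ import annotations


def split_domains_naive(text: str) -> list[str]:
    # Splitting an empty string still yields one element: ['']
    return text.split(",")


def split_domains(text: str) -> list[str]:
    """Split a comma-separated domain list, returning [] for empty input.

    Whitespace around each name is stripped and blank entries are dropped,
    so '', '  ', and 'a.example,,b.example' are all handled sensibly.
    """
    return [name.strip() for name in text.split(",") if name.strip()]


if __name__ == "__main__":
    print(split_domains_naive(""))  # ['']
    print(split_domains(""))        # []
    print(split_domains("www.example.com, example.com"))
    # ['www.example.com', 'example.com']
```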

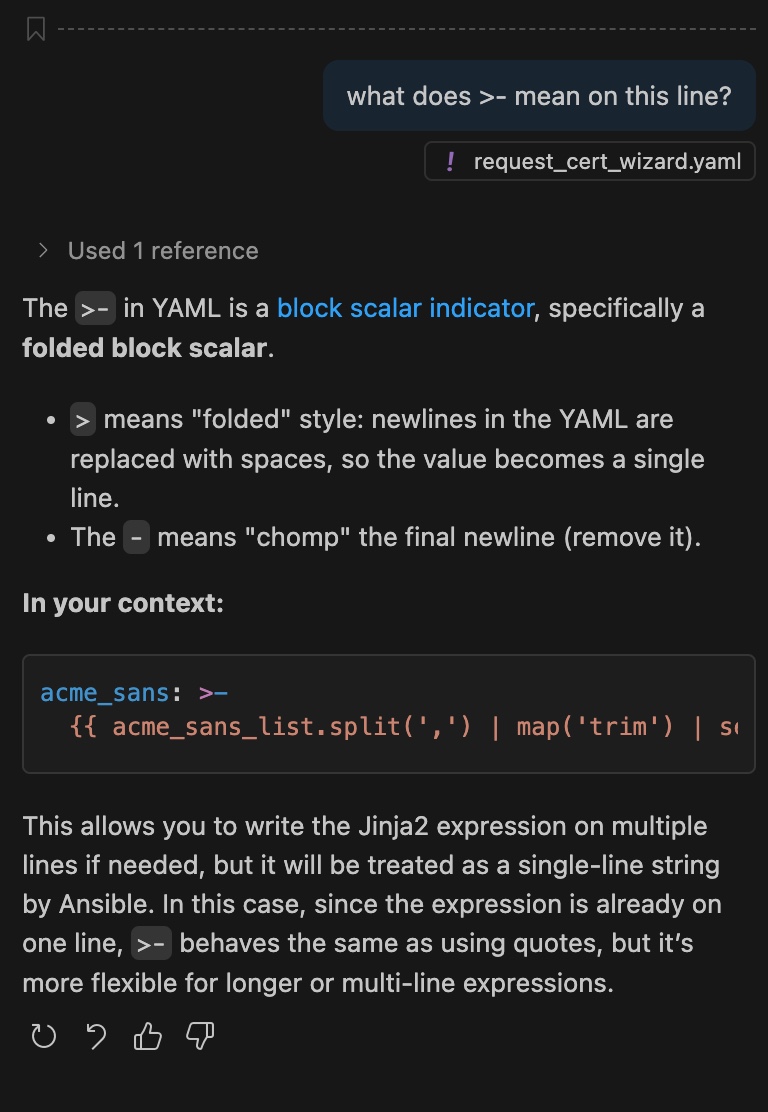

The suggested fix included some YAML syntax that’s outside of what I typically use, and while I had a pretty good idea what it probably meant, I wanted to be sure, so with my cursor on the line I continued my conversation with GitHub Copilot — “what does >- mean on this line?”:

That explanation made perfect sense, so I was now ready to test my new role. Unfortunately, it didn’t go well 🙁

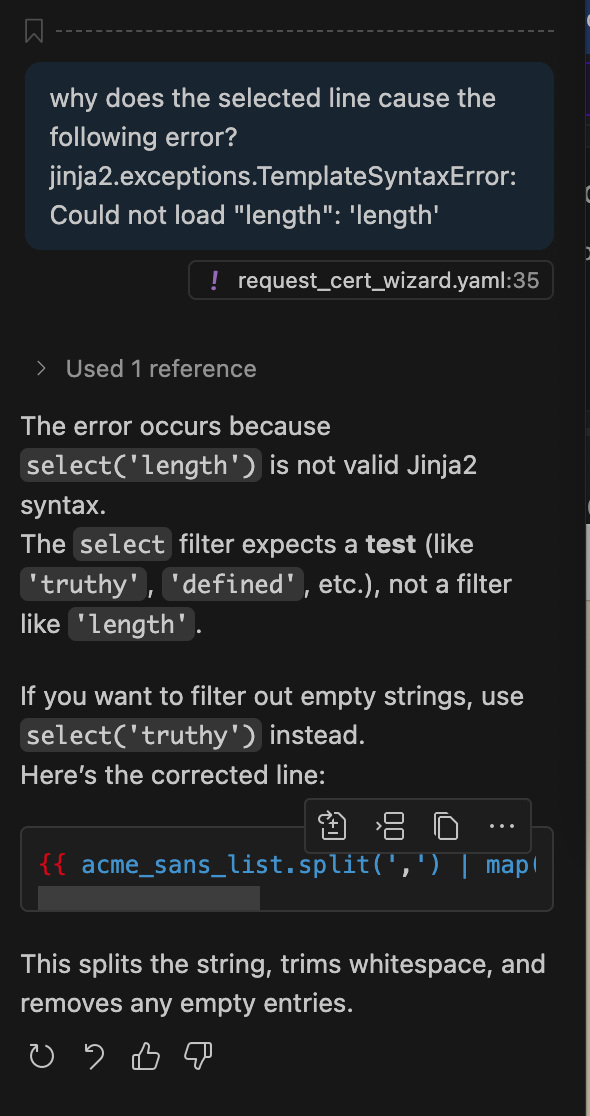

The role triggered an error when applied to my test server, and the error was in code suggested to me by GitHub Copilot. Because I was still new to using AI for coding, my first reflex was to throw the error into a search engine and hope a good Stack Overflow link ranked highly in the results. This didn’t work, so I decided to use GitHub Copilot’s ability to easily add context to my advantage. I selected the lines of code that triggered the error and literally asked why that code produced the given error message:

Both the explanation and the suggested fix made sense to me, though I did chuckle that I needed the help of AI to fix a bug added to my code by AI. 🙂

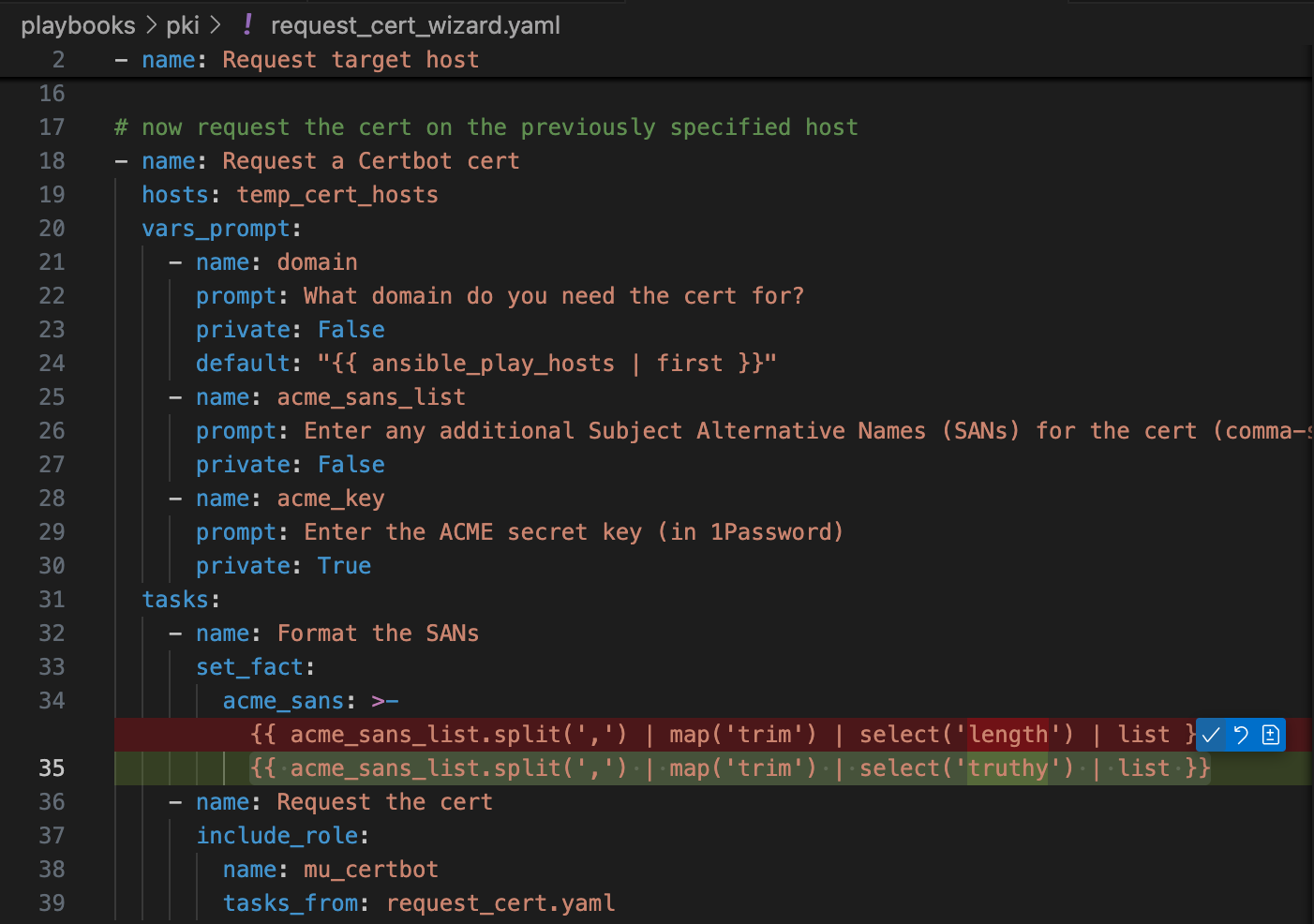

Rather than manually apply the suggested fix, I let GitHub Copilot do it for me. When you hover over a code snippet, three icons appear. The first asks GitHub Copilot to merge the suggestion into your code for you, the second inserts it at your cursor’s position, and the third copies it to the clipboard. Since I needed to change existing code, I clicked on the merge button. This triggers a cool animation of the change rippling through your code, and when it has found all the lines that it thinks need to be changed, it presents them to you as a series of diffs to confirm or reject:

With that change applied, my new role worked perfectly! 🎉

Final Thoughts

I was initially very skeptical about coding with AI because the hype smelled like, well, hype. Most of it is, but that’s not the point! Sure, these AI agents can’t do nearly as much as the hype merchants want you to believe, but they can do a lot, more than enough to be genuinely useful!

If you’ve never used any of these tools before, don’t judge them too quickly. They take a little getting used to, but you’ll learn to adapt your side of the conversation to align with their strengths and avoid their weaknesses. Even now, over half a year into my experiments, I’m still learning new things regularly.

Because these tools can be used in so many different ways, I think everyone’s experiences will be unique, but the most important things I’ve learned are:

- Make informed decisions about which AI agents you trust which subsets of your data to!

- Always think in terms of conversations, and when you change the subject, explicitly start a new conversation with the AI!

- Use the right tool for the job — integrations really smooth out the friction and minimise distractions. Flipping between windows is a lot more disruptive to your flow than doing everything on the one screen.

- Develop the mental muscle memory to treat pasting a secret into a code file as every bit as unacceptable as swearing at your boss!